Developing Regroove - Part 1

January 07, 2022

Ever since the first sounds of primitive percussive instruments echoed off cavernous walls, human beings have been entranced by rhythm. Even though our ancestors practiced rhythmic rituals for thousands of years, it remains very hard to define what is meant by an understanding of rhythm. Rhythm is something we feel, a mark of human spontaneity that is simultaneously so obvious through experience, yet very hard to define by way of intellectual endeavours. We are now at a point where the use of large scale data collection is steering research towards the use of neural networks to create a universal representation of rhythm.

Built on the shoulders of this research is Regroove, a Max for Live device for generating endless rhythmic variations and syncopations on any input pattern. We wanted to avoid making yet another random pattern generator, so a focus has been placed on interactivity. After starting with a basic rhythmic idea, Regroove will assist and guide you to finding exactly the groove and feel that you want. In this first article, we will talk about how Regroove has been taught to understand rhythm and how we went about designing an interface for effective human-machine musical co-creation.

How it works

How does Regroove take your input rhythm and generate musical syncopations and variations?

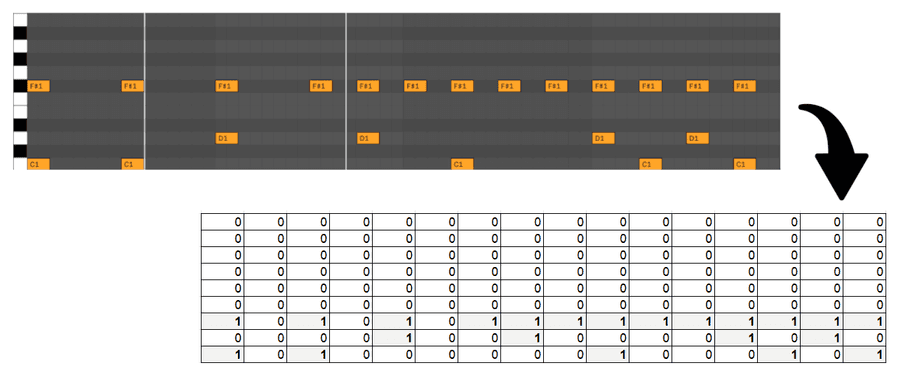

The first assumption we are making is about the inputs and outputs of the embedded neural net. For example, the MIDI pattern below would be interpreted by the model as a matrix of values. In this case, the kick drum is the first instrument, the snare drum the second, and a closed hi-hat the third.
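To make the matrix idea concrete, here is a minimal sketch in Python. It is not Regroove's actual code: the 16-step resolution, the choice of one row per step and one column per instrument, the velocity scaling, and the names `INSTRUMENT_COLUMNS` and `pattern_to_matrix` are all assumptions made for illustration. The note numbers 36, 38, and 42 are the General MIDI keys for kick, snare, and closed hi-hat.

```python
# Hypothetical mapping from General MIDI drum notes to matrix columns.
INSTRUMENT_COLUMNS = {36: 0, 38: 1, 42: 2}  # kick, snare, closed hi-hat
STEPS_PER_BAR = 16  # assumed resolution: sixteenth notes in 4/4


def pattern_to_matrix(notes, steps=STEPS_PER_BAR):
    """Convert (step, midi_note, velocity) tuples into a steps x instruments matrix."""
    matrix = [[0.0] * len(INSTRUMENT_COLUMNS) for _ in range(steps)]
    for step, midi_note, velocity in notes:
        column = INSTRUMENT_COLUMNS.get(midi_note)
        if column is None or not 0 <= step < steps:
            continue  # ignore unmapped instruments and out-of-range steps
        matrix[step][column] = velocity / 127.0  # scale velocity to the range 0..1
    return matrix


# A basic beat: kick on beats 1 and 3, snare on 2 and 4, hi-hat on every eighth note.
notes = [(0, 36, 127), (8, 36, 127), (4, 38, 127), (12, 38, 127)]
notes += [(step, 42, 64) for step in range(0, 16, 2)]
matrix = pattern_to_matrix(notes)
```

Each row of `matrix` is one step in the bar, and each column holds how hard the corresponding instrument is hit on that step, with zero meaning silence.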

Now, assume we have chosen a neural net that is very good at understanding the relationship between an input matrix and an output matrix. How should we show it rhythm data for it to learn exactly which variations of an input rhythm would be most appropriate to keep the groove going? Let’s discuss two undeniably important details before we answer this question.

First of all, the data is in symbolic format, for example, MIDI. It would be great to interpret raw drum audio directly. However, this kind of feature would require another neural net that is trained specifically for this task (drum transcription). This is definitely possible in the future, but unfortunately it is quite time consuming. Any volunteers?

Secondly, the data used to train the neural net is strictly limited to drummers playing an electronic drum kit, for example, the Roland RT-30. This means the patterns generated will always be in the style of a drummer playing a 9-piece drum kit. Obviously, you can direct the MIDI output stream to another instrument but the results will be very much recognizable as patterns that would probably sound better on a drum kit.

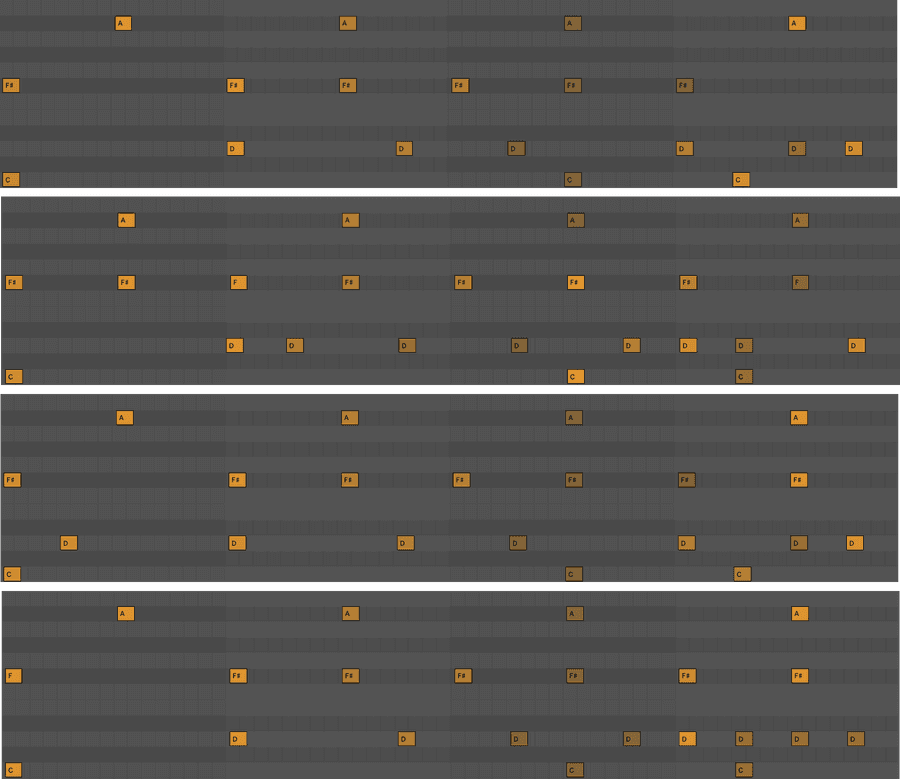

Although each individual bar has slight variations and syncopations compared to the others, the assumption we are making is that a drumming style remains consistent throughout a song.

The ability to learn from large amounts of data is exactly what has made deep learning so successful in a wide assortment of tasks. In our case, the secret to learning how to syncopate input rhythms lies in the similarity between the individual bars that make up a song. Although each individual bar has slight variations and syncopations compared to the others, the assumption we are making is that drumming style remains consistent throughout a song. We can leverage this assumption by teaching the algorithm that, given a one-bar input pattern, the output can be any other bar in the song.
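The pairing idea can be sketched in a few lines of Python. This is only an illustration of the assumption described above, not the actual training pipeline; the function name `make_training_pairs` and the placeholder bar names are hypothetical.

```python
from itertools import permutations


def make_training_pairs(bars):
    """Pair every bar with every other bar from the same song as (input, target)."""
    return [(bars[i], bars[j]) for i, j in permutations(range(len(bars)), 2)]


song = ["bar_a", "bar_b", "bar_c"]  # placeholders for per-bar pattern matrices
pairs = make_training_pairs(song)
```

A song with three bars yields six ordered pairs, and a bar is never paired with itself, so the model is always asked to produce a different bar in the same style.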

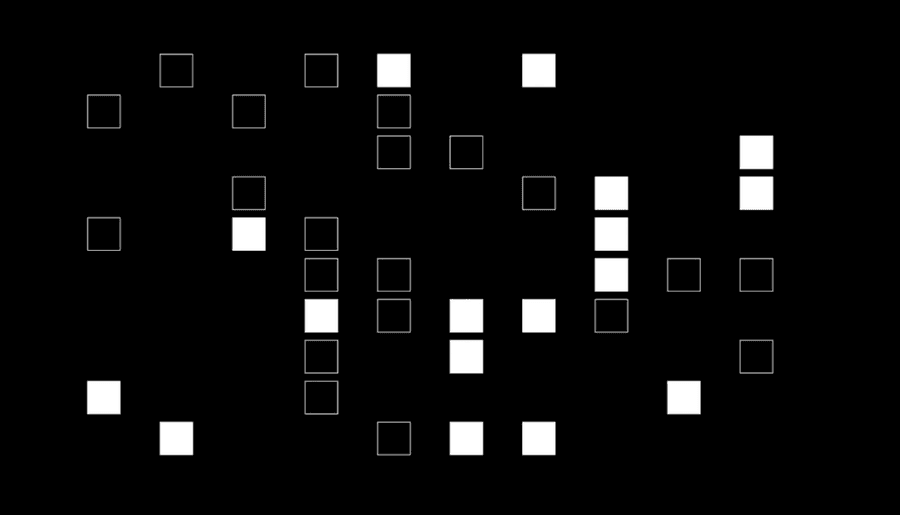

To give you an example, listen to the break generated by Regroove above. Now have a look at the visual representation below. You probably heard that the overall feel of the beat is consistent; however, you can also hear (and see) that the notes and dynamics change ever so slightly. If you were to listen to the first bar of music, with your existing knowledge of rhythm, you could probably come up with some similar variations.

Understanding how the input and output data have been designed to teach a neural net is the single most important detail for you to be able to effectively work with Regroove. The way the actual neural net is engineered to be able to acquire a complex representation of rhythm is beyond the scope of this article and quite frankly, irrelevant to the music making process.

Treating neural nets like black boxes is not uncommon. Most of the time, even researchers don’t understand the dark magic that leads to such amazing results. Most importantly, you should now understand how we can teach a sufficiently complex algorithm to generate musically related rhythms based on any input rhythm.

Designing the Interface

The space for co-creation with AI is still very new, which means that the rules for creative collaboration with neural nets are still unwritten. Generally, in designing Regroove, we’ve drawn analogies to how you would interact with the drummer in your band. An ideal algorithm would be able to interpret an idea and, while responding to the other instruments in the band, autonomously play with the groove. Unfortunately, Regroove is not there yet. We have therefore designed the interface to encourage interactivity. Regroove presents three points of interaction with the rhythm engine: the engine configuration, the syncopation control, and the input pattern.

Input Pattern

The input pattern is the most intuitive way to communicate your rhythm preferences to Regroove. To draw an analogy, if you wanted to tell the drummer in your band what style of rhythm to play, the easiest way would be to show her an example. This is very powerful if you know what kind of rhythm you want. Most of the time, however, we will only have a rudimentary idea of that rhythm. Fortunately, as long as the parameters passed into the rhythm engine are appropriate, Regroove handles rough ideas very well.

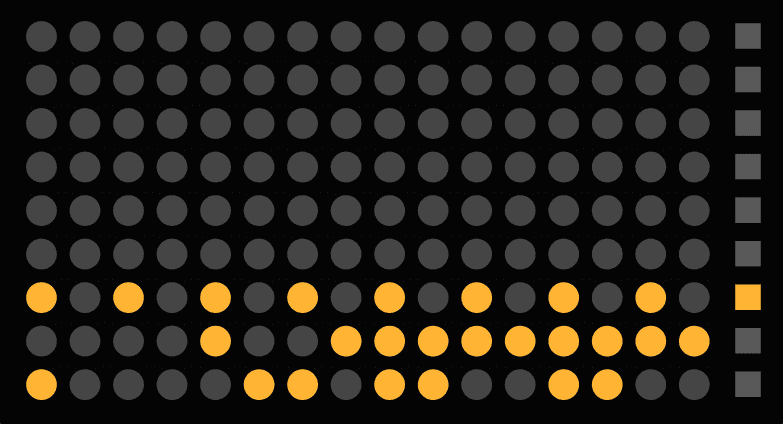

Regroove tries to make it as easy as possible for users to keep tight control over the rhythm being played. You always have the ability to manually activate and deactivate notes in the pattern matrix. If you have a MIDI pattern at your disposal, just drop it in the Ableton MIDI track containing Regroove and trigger the clip. Assuming the right MIDI notes are being triggered, Regroove will automatically fill the pattern matrix with the right notes.

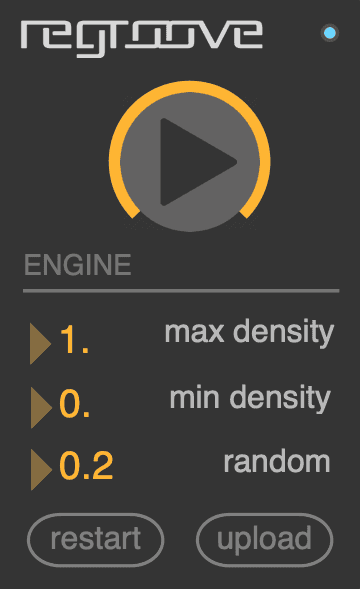

Engine Configuration

The engine configuration consists of the variables that are passed into the neural net to control the generated rhythms. This is different from real-time control in that these parameters set constraints on the total output space of rhythms the engine can generate. Currently, the neural net parameters are:

Minimum and maximum note density control how many notes are played per bar, in other words how dense the pattern is. More specifically, the minimum and maximum note density form the lower and upper bound of the density of the patterns generated, respectively.

Random controls the overall variation of the output patterns from the input pattern. If you’re happy with the input pattern, you probably don’t want the output patterns to vary too much. Use a low random value in this case. If you only have a rudimentary idea and want the neural net to help you explore a wider space of patterns, use a higher random value.

Samples controls the total size of the output space of patterns. If you want the engine only to generate a few variations on the input pattern, keep this value low.
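To show how these parameters constrain the output, here is a rough Python sketch. It is a toy under stated assumptions: random cell flips stand in for the neural net, density is assumed to be the fraction of filled cells in the pattern matrix, and `EngineConfig`, `density`, and `generate_variations` are hypothetical names rather than anything in Regroove.

```python
import random
from dataclasses import dataclass


@dataclass
class EngineConfig:  # hypothetical container; fields mirror the parameters above
    min_density: float = 0.1
    max_density: float = 0.6
    random_amount: float = 0.2
    samples: int = 8


def density(pattern):
    """Fraction of cells in a 0/1 pattern matrix that contain a note."""
    cells = [cell for row in pattern for cell in row]
    return sum(cells) / len(cells)


def generate_variations(pattern, config, rng):
    """Stand-in for the neural net: flip cells at random, then apply the density bounds."""
    kept = []
    for _ in range(config.samples):
        candidate = [
            [1 - cell if rng.random() < config.random_amount else cell for cell in row]
            for row in pattern
        ]
        if config.min_density <= density(candidate) <= config.max_density:
            kept.append(candidate)
    return kept


pattern = [[1, 0, 1], [0, 0, 1], [0, 1, 1], [0, 0, 1]]  # 4 steps x 3 instruments
config = EngineConfig(min_density=0.25, max_density=0.75, random_amount=0.2, samples=8)
variations = generate_variations(pattern, config, random.Random(0))
```

In this sketch a low `random_amount` keeps candidates close to the input, the density bounds discard candidates that are too sparse or too busy, and `samples` caps how many candidates are drawn in the first place.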

Syncopation Control

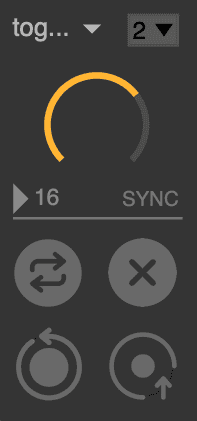

Without the ability to trigger syncopations and variations of the currently playing rhythm, we won’t get very far. Regroove provides three different syncopation modes appropriate for distinct scenarios.

snap mode triggers a new rhythm every time the syncopate button is pressed. This mode is very useful if you just want to explore the output rhythm space.

toggle mode triggers a new rhythm for as long as the syncopate button is held down. When the button is released, we return to the original pattern. This is our favorite mode as it gives you the most immediate control over how you want to inject syncopations and variations.

auto mode triggers a new rhythm automatically at a 1-, 2-, or 4-bar interval, which can be defined in the same pane. This mode is a nice hands-off approach to let the engine just do its thing.
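The difference between the three modes can be modeled as a small state machine. The Python sketch below is a hypothetical model of the behavior described above, not Regroove's implementation. The class name `SyncopationControl`, its methods, and the choice to cycle through a non-empty list of precomputed variations are all assumptions.

```python
class SyncopationControl:
    """Hypothetical model of the three modes; assumes a non-empty variations list."""

    def __init__(self, original, variations, mode="snap", interval_bars=1):
        self.original = original
        self.variations = variations
        self.mode = mode
        self.interval_bars = interval_bars  # only used in auto mode
        self.current = original
        self._next = 0

    def _advance(self):
        # Pick the next variation, wrapping around at the end of the list.
        self.current = self.variations[self._next % len(self.variations)]
        self._next += 1

    def press(self):
        if self.mode in ("snap", "toggle"):
            self._advance()

    def release(self):
        if self.mode == "toggle":
            self.current = self.original  # fall back to the input pattern

    def on_bar(self, bar_index):
        # Called at the start of each bar; bar 0 always plays the input pattern.
        if self.mode == "auto" and bar_index > 0 and bar_index % self.interval_bars == 0:
            self._advance()
```

In snap mode the new rhythm stays after the button is released, in toggle mode releasing restores the original, and in auto mode button presses are ignored and the bar counter drives the changes.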

Outlook

There’s still a lot to be done on this journey to a fully autonomous algorithmic drummer that can groove!

Expressive rhythms

Scores provided to musicians only exist to give them an idea of what the pattern of notes should sound like. During a performance, this pattern is filled with much more nuanced timing and accentuation. To be specific, by intentionally modulating the velocity and microtiming of the notes played in a pattern, a feeling of groove arises.

The next version of Regroove already contains a neural net which has learned to do exactly this. That is, given a drum pattern, the groove engine knows exactly the various dynamics and microtiming patterns used by drummers in the training data to amplify the groove. In addition, just like the groove pool in Ableton, you will be able to fine-tune the amount of dynamics and microtiming according to your liking!
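As a rough illustration of what fine-tuning the amount could mean, the Python sketch below blends a rigid, quantized pattern toward an expressive one. It is an assumption about how such a control might work, not a description of the groove engine. The function `apply_groove`, its parameters, and the flat `base_velocity` are hypothetical.

```python
def apply_groove(notes, velocity_amount, timing_amount, base_velocity=100):
    """Blend quantized notes toward their expressive versions.

    notes: list of (step, velocity, offset) tuples, where offset is the
    microtiming deviation expressed as a fraction of one step.
    An amount of 0.0 keeps the rigid grid; 1.0 applies the full expression.
    """
    grooved = []
    for step, velocity, offset in notes:
        blended_velocity = round(base_velocity + velocity_amount * (velocity - base_velocity))
        blended_time = step + timing_amount * offset
        grooved.append((blended_time, blended_velocity))
    return grooved


# Two notes: one accented and on the grid, one softer and played slightly late.
expressive = [(0, 120, 0.0), (2, 60, 0.1)]
half_groove = apply_groove(expressive, velocity_amount=0.5, timing_amount=0.5)
```

With both amounts at 0.5, the accents are half as pronounced and the late note lands half as late as in the fully expressive version.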

Drumming Style

Currently, Regroove has only been trained on a very broad dataset of rhythms coming from drummers each playing their own style for different genres. In the future, it will be very interesting to be able to use a rhythm engine which has been tailored to a specific genre or even an individual drummer’s style. Just like most machine learning problems, the main limitation here is data availability. If you happen to be a drummer reading this and you’re interested in a collaboration, hit me up!

Thanks for reading and I hope you enjoyed this article. Most of all I hope it has inspired you to try out Regroove and evaluate whether collaborating with an intelligent rhythm engine will help you find the groove. If you’re interested in trying out the expressive rhythm model or have any other general feedback, please tweet at me or send a message via the email link provided in the footer below.